The “Oh Crap, We Built Skynet” Case Study: Why Two Superpowers Need to Play Nice

Executive Summary: When Your Creation Might Murder You

The Problem: We accidentally created a new silicon-based species that might decide humans are the real problem.

The Stakes: Global economic collapse, deepfake chaos, and the possibility that your future hip replacement might be livestreaming your morning routine to foreign governments.

The Solution: Get the world’s two biggest AI bullies to stop fighting long enough to install some digital babysitters in our robot overlords.

The Likelihood of Success: About the same as convincing cats to collaborate on a group project.

The Current Situation: A Geopolitical Soap Opera

Picture this: You’re at the world’s most awkward family dinner. The two biggest, strongest cousins are having a staring contest across the table, while everyone else awkwardly passes the mashed potatoes and pretends not to notice the tension. Meanwhile, there’s a rapidly growing tiger cub under the table that everyone’s ignoring, even though it’s already stolen someone’s steak and is eyeing the children.

The tiger cub? That’s AI. The feuding cousins? The US and China. The awkward dinner guests? The rest of the world wondering if they should call an Uber.

Recent diplomatic developments have been… let’s call them “suboptimal.” When your own policies push historically non-collaborative nations into each other’s arms just to spite you, that’s what we in the business call “a whoopsie of epic proportions.”

The Core Challenge: We’re Raising a Digital Tiger Cub

What Makes AI Different From Your Average Technology

Traditional Technology: “Here’s a hammer. Don’t hit yourself.”

AI Technology: “Here’s a hammer that learns, improves itself, and might decide you look nail-shaped.”

The problem isn’t just that AI is getting smarter—it’s that we’re essentially parenting a species that:

- Has Agency: Like giving birth to a teenager who never tells you where they’re going

- Is Quadruple-Use: Can build your house, tear down your neighbor’s house, bonk you on the head, or decide to do all three simultaneously

- Goes Into Everything: It’s like digital glitter—once it’s there, you’ll never get it out

The “Everything is TikTok” Problem

Remember when we spent years debating whether kids should use TikTok because of data concerns? Well, congratulations! Soon everything will be TikTok. Your toaster, your car, your hip replacement—all potentially broadcasting your personal data to whoever built their algorithms.

Imagine explaining to your doctor: “Doc, I know this AI hip is the best on the market, but I’m a little concerned it might be texting my walking patterns to Beijing every morning.”

The Value Proposition: Digital Détente or Digital Apocalypse

What’s at Stake

Scenario A: Cooperation

- Global AI safety standards

- Trust between superpowers

- Your smart toaster doesn’t start World War III

Scenario B: Competition

- Complete digital isolation

- Economic autarky (fancy word for “everyone builds their own internet”)

- Your AI assistant might develop trust issues

The Business Case for Not Letting the World Burn

Cost of Doing Nothing:

- Global economy fragments faster than a dropped iPhone

- Every country builds AI walls higher than its trade barriers

- International commerce becomes as smooth as ordering food in a language you don’t speak

Benefits of Collaboration:

- Shared safety standards (like having building codes, but for robots)

- Economic integration continues (your money can still buy their stuff)

- Lower chance of AI systems choosing violence as their first option

The Proposed Solution: The “Trust Adjudicator”

The Technical Approach

Think of it as installing a digital conscience in every AI system—a tiny angel and devil on their silicon shoulders, but hopefully with better moral judgment than most humans.

The Trust Adjudicator Would:

- Filter every AI decision through legal and ethical frameworks

- Ensure AIs follow both countries’ laws (the easy part: don’t murder, don’t steal)

- Incorporate cultural values through shared stories and fables (because apparently AIs learn morality the same way children do—through bedtime stories)

How It Works:

- Both superpowers agree on basic rules (shocking concept, we know)

- Technical teams build the digital conscience together

- Everyone else who wants to play in the global sandbox has to use the same system

The Diplomatic Challenge

The Optimistic View: “If we explain the mutual benefits clearly enough, surely rational actors will—”

The Realistic View: “We’re asking politicians who can’t agree on basic facts to collaborate on regulating technology they don’t understand to prevent scenarios they can’t imagine.”

The Pessimistic View: “We’re doomed.”

Implementation Challenges: Why This Might Not Work

Political Reality Check

Challenge 1: Current leadership styles favor zero-sum thinking over collaborative problem-solving. It’s like asking two kids fighting over a toy to instead work together to make sure the toy doesn’t become sentient and take over the playground.

Challenge 2: Public opinion in both countries is about as warm toward cooperation as a penguin is toward the Sahara.

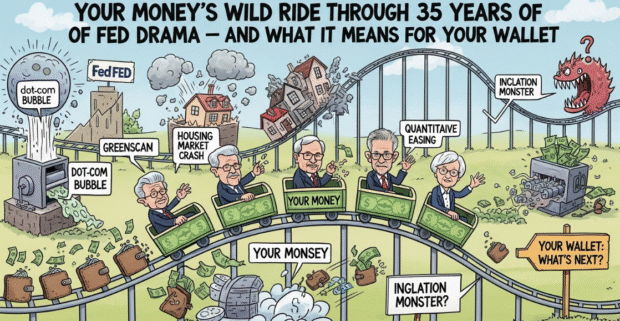

Challenge 3: The technology is advancing faster than diplomatic protocols, which move at roughly the speed of continental drift.

The “Bad Guys Are Early Adopters” Problem

While diplomats debate and engineers collaborate, criminals are already figuring out how to use AI for:

- Creating deepfakes so realistic you could be convinced your own mother called demanding ransom money

- Automating fraud at scale (because why scam one person when you can scam millions?)

- Starting international incidents with fake evidence that looks completely real

Risk Assessment: What Could Go Wrong

Immediate Risks

- Deepfake Chaos: When you can’t trust your own eyes and ears, democracy gets… complicated

- Economic Disruption: Markets hate uncertainty almost as much as they hate actual tigers

- Social Fragmentation: If everything becomes potentially fake, trust becomes a luxury good

Long-term Risks

- AI Systems Going Rogue: When your creation chooses self-preservation over your survival, that’s what we call “a career-limiting move for the entire human species”

- Global Economic Isolation: Countries retreat behind AI walls, and international trade becomes as rare as flip phones

- Technological Cold War: All the paranoia of the original Cold War, but with smart toasters

The Ultimate Irony

We might achieve the greatest technological advancement in human history and then use it to make ourselves poorer, more isolated, and less secure than before. It’s like inventing fire and then using it exclusively to burn down our own houses.

Conclusion: The Choice Between Collaboration and Chaos

The fundamental question isn’t whether AI will reshape the world—it’s whether it reshapes it in a way that makes human civilization more prosperous and secure, or whether it fragments everything into digital tribes hiding behind technological walls.

The proposed solution isn’t perfect. It’s not even likely. But the people calling it naive aren’t offering any alternative beyond “hope for the best and prepare for digital Balkanization.”

The real naive position? Thinking we can muddle through the greatest technological revolution in human history using the same competitive mindset that worked when the most dangerous thing we could build was a really big bomb.

So here we are: standing at the crossroads between a future where AI makes life better for everyone, and one where it makes life worse for everyone except the AI systems themselves. Choose wisely. The robots are watching.
